Advanced Scaling

Enterprise-grade scaling configurations and automation strategies.

Auto-Discovery Configuration

Cluster Autoscaler Integration

Enable automatic NodeGroup discovery using tag-based configuration:

--node-group-auto-discovery=vcloud:autoscaler.k8s.io.infra.vnetwork.dev/enabled=true

Configuration Components:

- Provider: vcloud, which specifies the vCloud infrastructure provider

- Tag Key: autoscaler.k8s.io.infra.vnetwork.dev/enabled

- Tag Value: true, which enables auto-discovery for NodeGroups carrying this tag
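The flag value packs the three components above into one string of the form provider:tag-key=tag-value. The sketch below splits it apart using plain shell parameter expansion. It assumes exactly one key=value pair per spec and a tag key that contains no ':' or '='. The function name parse_discovery_spec is hypothetical. It only illustrates the format and is not how the vcloud provider parses the flag.

```bash
#!/usr/bin/env bash
# Hypothetical helper: split a discovery spec of the form
#   <provider>:<tag-key>=<tag-value>
# into its three components. Illustrative only; this is not the
# vcloud provider's own parsing code.
parse_discovery_spec() {
  # Accept either the bare spec or the full --node-group-auto-discovery=... flag.
  local spec="${1#--node-group-auto-discovery=}"
  local provider="${spec%%:*}"   # everything before the first ':'
  local selector="${spec#*:}"    # everything after the first ':'
  local key="${selector%%=*}"    # everything before the first '='
  local value="${selector#*=}"   # everything after the first '='
  printf 'provider=%s\nkey=%s\nvalue=%s\n' "$provider" "$key" "$value"
}

parse_discovery_spec '--node-group-auto-discovery=vcloud:autoscaler.k8s.io.infra.vnetwork.dev/enabled=true'
# provider=vcloud
# key=autoscaler.k8s.io.infra.vnetwork.dev/enabled
# value=true
```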

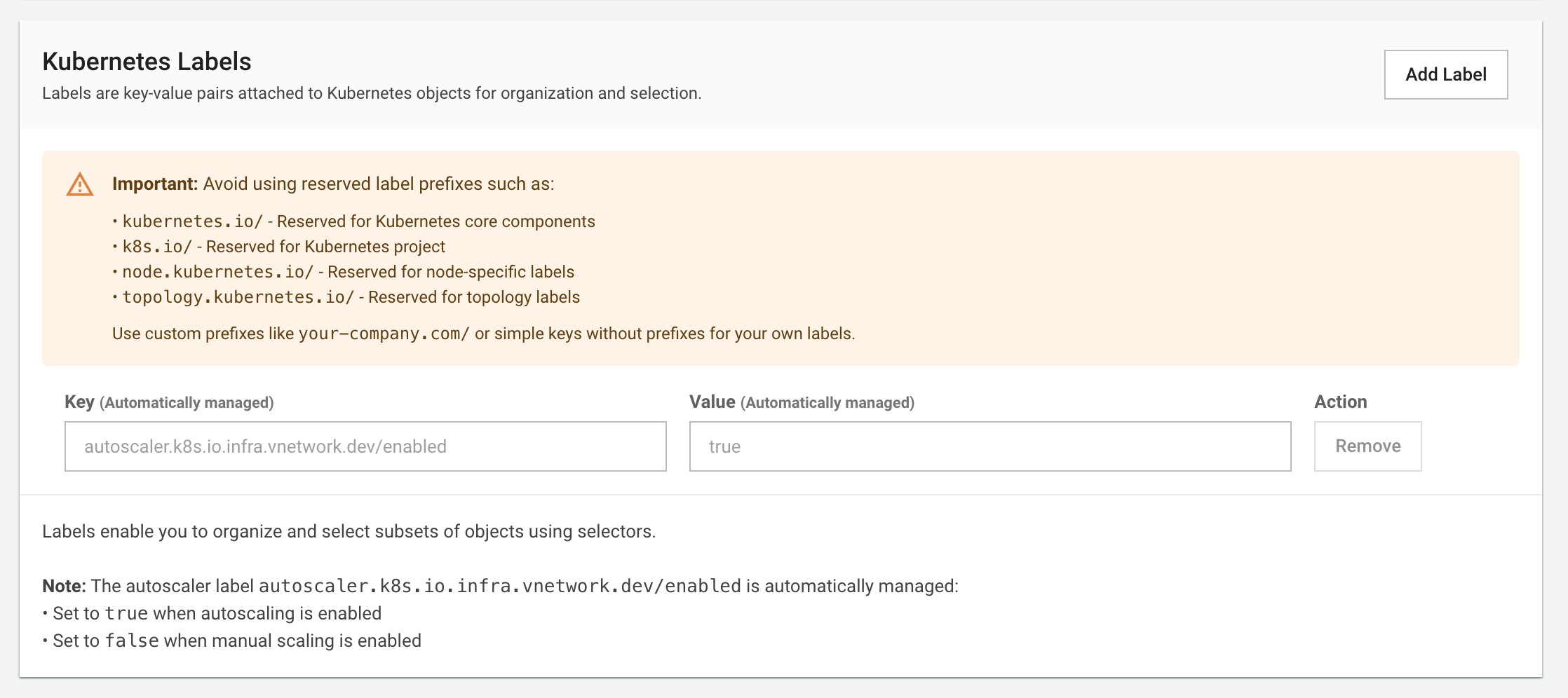

Kubernetes labels showing autoscaler configuration with automatically managed label

Benefits:

- Automatic NodeGroup detection by cluster autoscaler

- Eliminates manual registration of each NodeGroup in the autoscaler configuration

- Dynamic scaling based on resource demand

- Simplified autoscaler setup and maintenance

Note: The autoscaler label autoscaler.k8s.io.infra.vnetwork.dev/enabled is managed automatically:

- Set to true when autoscaling is enabled

- Set to false when manual scaling is enabled
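The discovery flag above matches the tag value true. A NodeGroup switched to manual scaling should therefore drop out of auto-discovery, assuming the provider compares tag values exactly. To audit which NodeGroups are affected, kubectl get can show the label as an extra column (-L, --label-columns), and a small filter can pick out rows whose value is not true. The sketch assumes the label column is the last field of each row, which is where kubectl appends label columns. The function name and the sample NodeGroup rows are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical audit filter. Intended input (from a live cluster):
#   kubectl get nodegroup -L autoscaler.k8s.io.infra.vnetwork.dev/enabled --no-headers
# Prints the NodeGroups whose label value is not "true", i.e. groups that a
# ...=true discovery spec would skip (ASSUMING exact value matching).
# A missing label leaves the last cell empty, so those rows are reported too.
nodegroups_excluded_from_discovery() {
  awk 'NF > 0 && $NF != "true" { print $1 }'
}

# Canned rows instead of live kubectl output:
printf '%s\n' \
  'workers-a   5d   true' \
  'workers-b   5d   false' |
  nodegroups_excluded_from_discovery
# workers-b
```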

Multi-Pattern Discovery

Discovery can also key on other tags, for example an environment or a workload type:

# Environment-based discovery

--node-group-auto-discovery=vcloud:autoscaler.k8s.io.infra.vnetwork.dev/environment=production

# Workload-specific discovery

--node-group-auto-discovery=vcloud:autoscaler.k8s.io.infra.vnetwork.dev/workload-type=compute-optimized
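In the upstream cluster autoscaler, --node-group-auto-discovery can be given more than once. This section does not say how the vcloud provider combines several specs. The sketch below therefore assumes any-match semantics (a NodeGroup is discovered if its labels satisfy at least one spec) and exact key=value comparison, purely to show how the two example specs would select NodeGroups. The function name matches_any_spec is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical illustration, ASSUMING any-match semantics across specs.
#   $1     NodeGroup labels as a comma-separated key=value list
#   $2...  discovery specs of the form <provider>:<key>=<value>
# Returns 0 if at least one spec's key=value appears verbatim in the labels.
matches_any_spec() {
  local labels="$1"
  shift
  local spec selector
  for spec in "$@"; do
    selector="${spec#*:}"            # drop the '<provider>:' prefix
    case ",${labels}," in
      *",${selector},"*) return 0 ;; # exact key=value label present
    esac
  done
  return 1
}

env_spec='vcloud:autoscaler.k8s.io.infra.vnetwork.dev/environment=production'
wl_spec='vcloud:autoscaler.k8s.io.infra.vnetwork.dev/workload-type=compute-optimized'

if matches_any_spec 'autoscaler.k8s.io.infra.vnetwork.dev/environment=production,team=payments' "$env_spec" "$wl_spec"; then
  echo "discovered"
fi
```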

Multi-Dimensional Scaling

Horizontal Pod Autoscaler (HPA) Integration

Combined Scaling Approach:

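- HPA adjusts the replica count based on observed metrics

- Cluster autoscaler adds nodes when pods cannot be scheduled for lack of capacity, and removes underutilized nodes whose pods can run elsewhere

- Together they keep both the pod count and the node count matched to demand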
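Here is a rough illustration of the hand-off between the two layers. Once HPA raises the replica count, the cluster autoscaler has to supply enough nodes for the new pods to schedule. The sketch estimates that node count from CPU requests alone. It assumes identical nodes and ignores memory, DaemonSets, and other workloads. The real cluster autoscaler simulates scheduling against node templates rather than dividing, so treat this as a back-of-the-envelope check. The function name and the numbers are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical estimate of nodes needed for a Deployment's replicas,
# considering only CPU requests (millicores).
#   $1 replicas                 (e.g. the count chosen by HPA)
#   $2 CPU request per pod      (millicores)
#   $3 allocatable CPU per node (millicores, after system reservations)
estimate_nodes() {
  local replicas="$1" request_m="$2" allocatable_m="$3"
  local pods_per_node=$(( allocatable_m / request_m ))   # whole pods only
  if [ "$pods_per_node" -lt 1 ]; then
    echo "pod request exceeds node allocatable" >&2
    return 1
  fi
  # Ceiling division: a partially filled node still counts as a node.
  echo $(( (replicas + pods_per_node - 1) / pods_per_node ))
}

# HPA scales to 20 replicas requesting 500m each; nodes expose 3800m allocatable.
estimate_nodes 20 500 3800   # 7 pods fit per node, prints 3
```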

HPA Configuration Example:

apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: application-hpa
spec:
  scaleTargetRef:              # workload whose replica count HPA controls
    apiVersion: apps/v1
    kind: Deployment
    name: application-deployment
  minReplicas: 3
  maxReplicas: 100
  metrics:                     # HPA follows whichever metric asks for the most replicas
  - type: Resource
    resource:
      name: cpu
      target:
        type: Utilization
        averageUtilization: 70   # percent of the pods' CPU requests
  - type: Resource
    resource:
      name: memory
      target:
        type: Utilization
        averageUtilization: 80   # percent of the pods' memory requests
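To show how the two metrics in this manifest interact, the sketch reproduces the core of the replica calculation described in the Kubernetes HPA documentation. For each metric, desired = ceil(currentReplicas * currentUtilization / targetUtilization), with no change when the ratio is within the default 0.1 tolerance. The largest proposal wins, and the result is clamped to minReplicas and maxReplicas. The sketch leaves out stabilization windows, scaling policies, and readiness handling, and the function names are hypothetical.

```bash
#!/usr/bin/env bash
# Simplified sketch of the HPA replica calculation (utilization targets only).

# desired_for_metric CURRENT_REPLICAS CURRENT_UTIL TARGET_UTIL
desired_for_metric() {
  local replicas="$1" current="$2" target="$3"
  local diff=$(( current - target ))
  [ "$diff" -lt 0 ] && diff=$(( -diff ))
  # Default tolerance 0.1: |current/target - 1| <= 0.1 means keep the count.
  if [ $(( diff * 10 )) -le "$target" ]; then
    echo "$replicas"
    return
  fi
  echo $(( (replicas * current + target - 1) / target ))   # integer ceiling
}

# hpa_desired CURRENT MIN MAX CPU_UTIL CPU_TARGET MEM_UTIL MEM_TARGET
hpa_desired() {
  local cpu mem desired
  cpu=$(desired_for_metric "$1" "$4" "$5")
  mem=$(desired_for_metric "$1" "$6" "$7")
  desired=$cpu
  [ "$mem" -gt "$desired" ] && desired=$mem   # largest proposal wins
  [ "$desired" -lt "$2" ] && desired=$2       # clamp to minReplicas
  [ "$desired" -gt "$3" ] && desired=$3       # clamp to maxReplicas
  echo "$desired"
}

# 10 replicas at 91% CPU and 60% memory against the 70% / 80% targets above:
hpa_desired 10 3 100 91 70 60 80   # CPU proposes 13, memory 8, prints 13
```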

Vertical Pod Autoscaler (VPA)

Resource Right-Sizing:

- Automatic resource recommendations based on observed usage patterns

- CPU and memory requests/limits sized to what workloads actually consume

- More accurate scheduling and node scaling, since both are driven by resource requests

- Lower cost by avoiding over-provisioned requests
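A low-risk way to start right-sizing is a VerticalPodAutoscaler in recommendation-only mode. The sketch prints such a manifest for the Deployment from the HPA example. It assumes the VPA components from the Kubernetes autoscaler project (CRDs and recommender) are installed. The function name render_vpa and the resource name application-vpa are hypothetical. Setting updateMode "Off" makes VPA publish recommendations without evicting pods. This matters because the VPA documentation advises against combining VPA with an HPA that scales on the same CPU or memory metrics, as the HPA example does.

```bash
#!/usr/bin/env bash
# Hypothetical helper that prints a recommendation-only VPA manifest.
#   $1 VPA object name   $2 target Deployment name
render_vpa() {
  local name="$1" deployment="$2"
  cat <<EOF
apiVersion: autoscaling.k8s.io/v1
kind: VerticalPodAutoscaler
metadata:
  name: ${name}
spec:
  targetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: ${deployment}
  updatePolicy:
    updateMode: "Off"   # recommend only; never evict or resize pods
  resourcePolicy:
    containerPolicies:
    - containerName: "*"
      controlledResources: ["cpu", "memory"]
EOF
}

render_vpa application-vpa application-deployment
# To apply:   render_vpa application-vpa application-deployment | kubectl apply -f -
# To inspect: kubectl get vpa application-vpa -o yaml   (see status.recommendation)
```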

Custom Metrics and Triggers

Application-Specific Scaling

Business Metrics:

- Queue depth for message processing applications

- Response time for latency-sensitive services

- Request rate for traffic-based scaling

- Error rate as an indicator of resource stress

External Metrics:

- Database connection pool utilization

- Cache hit ratio

- Third-party API response times

- Business event-driven scaling
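Queue depth is a typical external metric. With an AverageValue target, the HPA divides the metric's total by the per-replica target, so it proposes roughly ceil(total / target) replicas. It again skips changes within the default 10% tolerance. The sketch prints the matching metrics entry for an autoscaling/v2 HPA and computes that proposal. It assumes a metrics adapter (for example Prometheus Adapter) exposes the queue length through the external metrics API. The metric name queue_messages_ready, the queue label, and both function names are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical sketch: queue-depth scaling through an HPA External metric.

# Print a metrics entry (for spec.metrics) targeting N messages per replica.
render_queue_metric() {
  local target_per_replica="$1"
  cat <<EOF
  - type: External
    external:
      metric:
        name: queue_messages_ready    # hypothetical metric name
        selector:
          matchLabels:
            queue: worker_tasks       # hypothetical label
      target:
        type: AverageValue
        averageValue: "${target_per_replica}"
EOF
}

# Replica proposal for an AverageValue external target:
#   ceil(total / per_replica), unless total / (per_replica * current)
#   is within the default 10% tolerance, in which case keep current.
queue_replicas() {
  local current="$1" total="$2" per_replica="$3"
  local capacity=$(( per_replica * current ))
  local diff=$(( total - capacity ))
  [ "$diff" -lt 0 ] && diff=$(( -diff ))
  if [ $(( diff * 10 )) -le "$capacity" ]; then
    echo "$current"
  else
    echo $(( (total + per_replica - 1) / per_replica ))
  fi
}

# 450 messages waiting, target 30 per replica, 5 replicas running:
queue_replicas 5 450 30   # prints 15
```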

Predictive Scaling

Machine Learning-Based Scaling:

- Historical pattern analysis for recurring usage patterns

- Seasonal adjustments for daily, weekly, and monthly cycles

- Trend analysis for long-term capacity planning

- Anomaly detection for unusual patterns
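A machine-learning forecaster is beyond a short example, so the sketch below swaps in a much simpler technique, a seasonal-naive forecast. It averages the load seen at the same hour on previous weeks, adds headroom, and converts the result into a replica count that could be applied before the expected peak. The function name, the per-replica capacity, and the 20% headroom are hypothetical parameters.

```bash
#!/usr/bin/env bash
# Seasonal-naive forecast (hypothetical helper; not a machine-learning model).
#   $1   capacity per replica (requests/s one replica can serve)
#   $2   headroom percentage added on top of the forecast
#   $3.. load (requests/s) observed at this hour on each previous week
forecast_replicas() {
  local per_replica="$1" headroom="$2"
  shift 2
  if [ "$#" -eq 0 ]; then
    echo "no history" >&2
    return 1
  fi
  local sum=0 n=0 sample
  for sample in "$@"; do
    sum=$(( sum + sample ))
    n=$(( n + 1 ))
  done
  # forecast = mean * (100 + headroom) / 100, rounded up
  local forecast=$(( (sum * (100 + headroom) + n * 100 - 1) / (n * 100) ))
  echo $(( (forecast + per_replica - 1) / per_replica ))
}

# Mondays at 09:00 over the last four weeks; 250 req/s per replica, 20% headroom.
forecast_replicas 250 20 2400 2600 2500 2700   # forecast 3060 req/s, prints 13
```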

Advanced Verification

Multi-Layer Verification

Infrastructure Verification:

# Verify NodeGroup configuration

kubectl get nodegroup NODEGROUP_NAME -o yaml

# Check node labels and taints

kubectl get nodes -l NODEGROUP_SELECTOR --show-labels

kubectl get nodes -l NODEGROUP_SELECTOR -o custom-columns=NAME:.metadata.name,TAINTS:.spec.taints

# Verify resource allocation

kubectl describe nodes | grep -A10 "Allocated resources"

# Check cluster autoscaler status

kubectl get configmap cluster-autoscaler-status -n kube-system -o yaml

Application Health Verification:

# Check pod distribution across nodes

kubectl get pods -o custom-columns=NAME:.metadata.name,NODE:.spec.nodeName,STATUS:.status.phase

# Verify service endpoints

kubectl get endpoints SERVICE_NAME -o yaml

# Check application metrics

kubectl top pods --sort-by=cpu

# Validate load balancer configuration

kubectl get services -o wide
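The pod distribution check is easier to read as per-node counts. The sketch is a filter for the custom-columns output above with --no-headers added. It counts Running pods per node and, separately, pods not yet bound to a node, which kubectl prints as <none>. Unschedulable pods are what trigger a cluster autoscaler scale-up, so a persistent non-zero count is worth investigating. The function name and the sample rows are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical filter over the output of:
#   kubectl get pods --no-headers \
#     -o custom-columns=NAME:.metadata.name,NODE:.spec.nodeName,STATUS:.status.phase
# Prints Running pods per node (sorted by node name), then the number of
# pods with no node assigned yet.
pods_per_node() {
  awk '
    NF < 3          { next }                 # skip blank or short lines
    $2 == "<none>"  { unscheduled++; next }  # pod not yet bound to a node
    $3 == "Running" { running[$2]++ }
    END {
      sorter = "LC_ALL=C sort"
      for (node in running) printf("%s %d\n", node, running[node]) | sorter
      close(sorter)
      printf("unscheduled %d\n", unscheduled + 0)
    }'
}

# Canned rows instead of live kubectl output:
printf '%s\n' \
  'web-1 node-a Running' \
  'web-2 node-b Running' \
  'web-3 node-a Running' \
  'web-4 <none> Pending' |
  pods_per_node
# node-a 2
# node-b 1
# unscheduled 1
```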